Conversational Goal-Conflict Explanations in Planning via Multi-Agent LLMs

Published:

Summary

We formalize and implement an LLM-based conversational explanation interface for planning.

Contribution

This is my first first-author paper!

Abstract

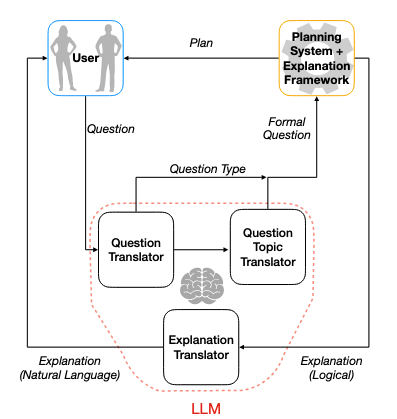

When automating plan generation for a real-world sequential decision problem, the goal is often not to replace the human planner, but to facilitate the tedious work. In an iterative process, the human’s role is to guide the planner according to their preferences and expert experience. Explanations that respond to users’ questions are crucial to increase trust in the system and improve understanding of the sample solutions. To enable natural interaction with such a system, we present an explanation framework agnostic architecture for interactive natural language explanations that enables user and context dependent interactions. We propose conversational interfaces based on Large Language Models (LLMs) and instantiate the explanation framework with goal-conflict explanations. As a basis for future evaluation, we provide a tool for domain experts that implements our interactive natural language explanation architecture.

Click here to download the paper

Recommended citation:

@inproceedings{fouilhe2025conversational,
  title={Conversational Goal-Conflict Explanations in Planning via Multi-Agent {LLM}s},
  author={Guilhem Fouilh{\'e} and Rebecca Eifler and Sylvie Thiebaux and Nicholas Asher},
  booktitle={AAAI 2025 Workshop LM4Plan},
  year={2025},
  url={https://openreview.net/forum?id=Ys875Rgl4o}
}