Conversational Goal-Conflict Explanations in Planning via Multi-Agent LLMs

Summary

We formalize and implement an LLM-based conversational explanation interface for planning, and test it with a user study.

Abstract

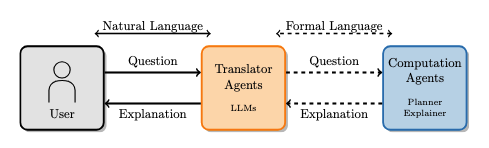

When automating plan generation for a real-world sequential decision problem, the goal is often not to replace the human planner but to support an iterative reasoning and elicitation process in which the human guides the planner according to their preferences and expertise. In this context, explanations that respond to users’ questions are crucial for improving their understanding of potential solutions and increasing their trust in the system. To enable natural interaction with such a system, we present a multi-agent Large Language Model (LLM) architecture that is agnostic to the explanation framework and enables user- and context-dependent interactive explanations. We also describe an instantiation of this architecture for goal-conflict explanations, which we use to conduct a pilot case study comparing the LLM-powered interaction with a more conventional template-based explanation interface.